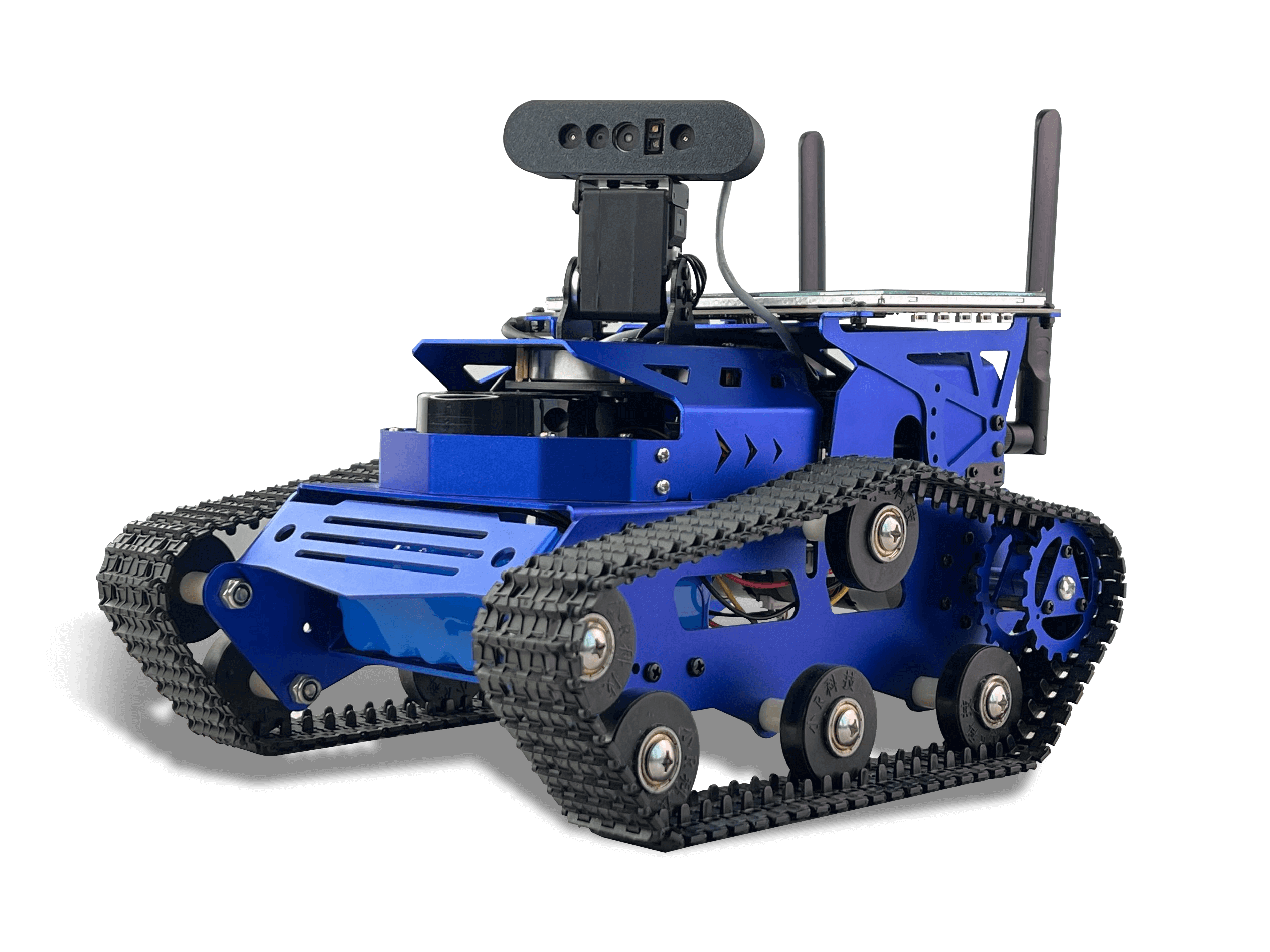

ROSHunter is a professional-grade autonomous navigation car based on the NVIDIA Jetson Nano and the ROS2 framework. Its chassis uses a crawler (tracked) structure, which gives the robot strong adaptability and stability on a wide range of complex terrain. With nylon tracks and high-performance DC motors, it is particularly well suited to scenarios that require precise operation in complex environments, such as logistics, transportation, and exploration.

To meet advanced navigation and task-execution needs, ROSHunter is equipped with a set of high-performance hardware, including high-torque geared motors with encoders, a lidar, a 3D depth camera, a 7-inch LCD screen, and programmable lights. Integrating these components improves the robot's motion-control accuracy and makes it more efficient at complex tasks such as SLAM mapping in ROS, navigation path planning, deep learning, and visual interaction.

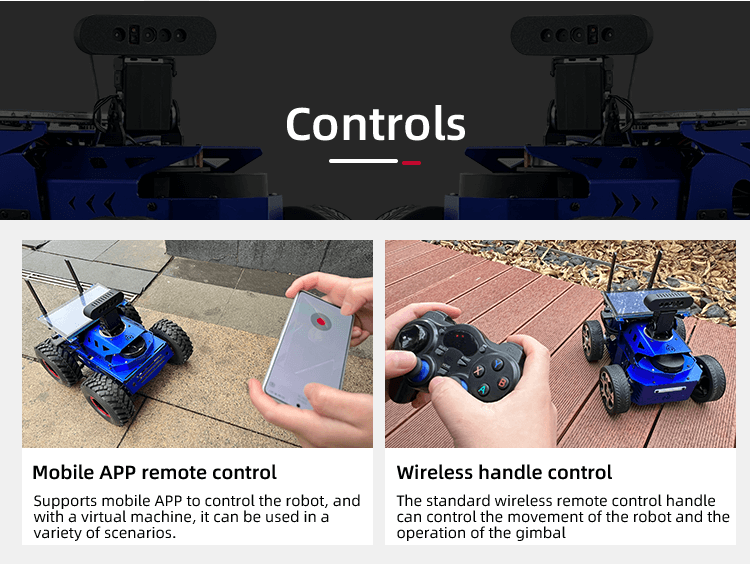

To improve the user experience, ROSHunter supports multiple control methods, including a mobile app, RViz in a virtual machine, and a PS4 controller. These options give users flexibility and make the workflow from mapping to navigation more convenient. In addition, the complete ROS course provided by XiaoR Technology includes technical materials, source code, documentation, and teaching videos to help users quickly master the core skills of robot development and application.

ROSHunter also comes with the "ROS human-computer interaction system" developed in-house by XiaoR Technology. It provides real-time feedback on the robot's operating status and on running programs (such as mapping, navigation, and map saving), and lets users view system information, which further improves the robot's interactivity and practicality. This autonomous navigation robot is ideal not only for technology enthusiasts and researchers but also as a tool for teaching advanced technologies in education.

1. Lidar mapping and navigation - SLAM mapping algorithms such as Gmapping, Karto, and Hector can be used, with support for path planning, fixed-point navigation, and multi-point navigation.

2. RTAB-VSLAM 3D visual mapping and navigation - The RTAB-Map SLAM algorithm fuses visual and lidar data to build a 3D color map. The robot can navigate autonomously and avoid obstacles within the map, and it supports global relocalization and autonomous positioning.

3. Multi-point navigation and dynamic obstacle avoidance - The lidar scans the surrounding environment in real time, and the robot re-plans its path when it detects an obstacle.

4. Depth images and point clouds - The camera's depth image, color image, and point cloud data can be obtained through the corresponding API.
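A point cloud is typically derived from a depth image by back-projecting each pixel through the pinhole camera model: for pixel (u, v) with depth Z, X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy. The sketch below illustrates that relationship in plain Python; it is not ROSHunter's own API. The function name `depth_to_points` is hypothetical, the intrinsics (fx, fy, cx, cy) must come from the camera's calibration (in ROS2 they are usually published in the `k` matrix of a `sensor_msgs/msg/CameraInfo` message), and `depth_scale=0.001` assumes raw depth values in millimeters.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(
    depth: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    depth_scale: float = 0.001,  # assumption: raw depth is in millimeters
) -> List[Point3D]:
    """Back-project a row-major depth image into camera-frame (x, y, z) points in meters.

    Hypothetical helper for illustration. Pixels with raw depth <= 0 are
    treated as invalid (no return) and skipped.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):          # v = image row index
        for u, raw in enumerate(row):        # u = image column index
            if raw <= 0:
                continue
            z = raw * depth_scale            # convert raw units to meters
            x = (u - cx) * z / fx            # pinhole model, horizontal axis
            y = (v - cy) * z / fy            # pinhole model, vertical axis
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Tiny 2x2 depth image with made-up intrinsics, for demonstration only.
    print(depth_to_points([[1000, 0], [2000, 500]], fx=1.0, fy=1.0, cx=0.0, cy=0.0))
```

A real depth frame has hundreds of thousands of pixels, so production code usually vectorizes this loop (for example with NumPy) or relies on the camera driver's own point cloud output.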

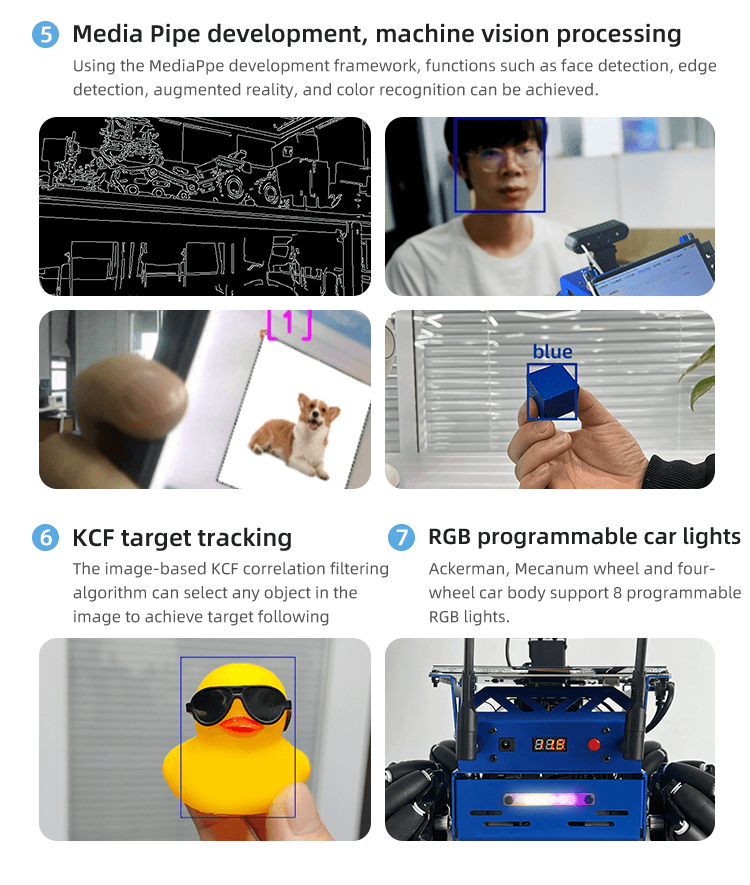

5. MediaPipe development and machine vision processing - Using the MediaPipe framework, functions such as face detection, edge detection, augmented reality, and color recognition can be implemented.

6. KCF target tracking - The image-based KCF (Kernelized Correlation Filter) tracking algorithm lets you select any object in the image and have the robot follow it.

7. RGB programmable car lights - The Ackermann, Mecanum-wheel, and four-wheel chassis support 8 programmable RGB lights.
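Simple color recognition is commonly done by converting pixels from RGB to HSV and thresholding on hue, which is less sensitive to brightness than raw RGB. The sketch below shows that technique using Python's standard `colorsys` module instead of MediaPipe or OpenCV, and is not necessarily how XiaoR's course implements it. The function names and the hue, saturation, and value thresholds are hypothetical and would need tuning for the actual camera and lighting.

```python
import colorsys
from collections import Counter
from typing import Iterable, Optional, Tuple

# Hypothetical hue ranges in degrees [low, high); red wraps around 0/360.
HUE_RANGES = [
    ("red", 0, 15),
    ("yellow", 40, 70),
    ("green", 70, 170),
    ("blue", 190, 260),
    ("red", 345, 360),
]


def classify_rgb(r: int, g: int, b: int,
                 min_sat: float = 0.4, min_val: float = 0.2) -> Optional[str]:
    """Label one 8-bit RGB pixel by hue, or return None if it is too gray or dark."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)  # all in [0, 1]
    if s < min_sat or v < min_val:
        return None
    hue = h * 360  # convert to degrees
    for name, low, high in HUE_RANGES:
        if low <= hue < high:
            return name
    return None  # hue falls in a gap between the configured ranges


def dominant_color(pixels: Iterable[Tuple[int, int, int]]) -> Optional[str]:
    """Return the most frequent color label among pixels in a region (majority vote)."""
    counts = Counter(
        label for label in (classify_rgb(r, g, b) for r, g, b in pixels)
        if label is not None
    )
    if not counts:
        return None
    return counts.most_common(1)[0][0]


if __name__ == "__main__":
    region = [(255, 0, 0), (250, 10, 10), (0, 0, 255)]
    print(dominant_color(region))
```

Voting over a region rather than trusting a single pixel makes the result more robust to sensor noise and small highlights.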

Remote control methods: mobile app / wireless gamepad / PC

Diagram of the tracked car body dimensions
