Using ROS (Robot Operating System) to build AI functions such as mapping and navigation on a lidar-equipped programmable robot car involves several steps and components. The following explanation uses the XR TH ROS robot car as an example:

1. Hardware selection and assembly

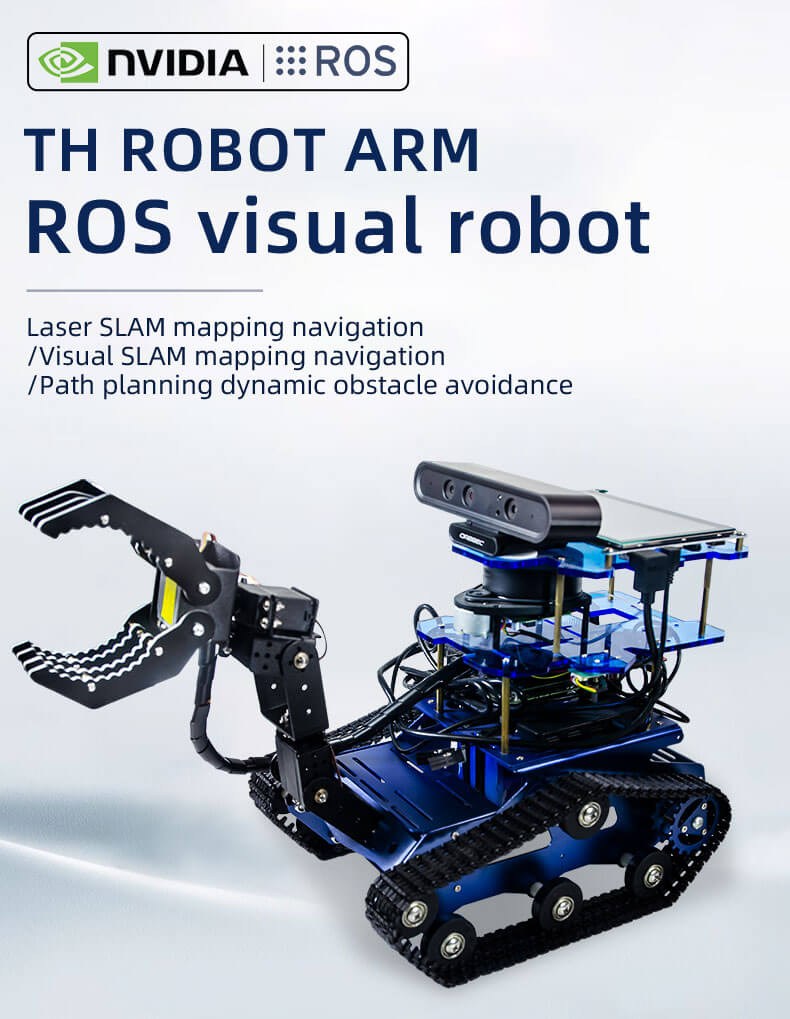

Select hardware: The XR TH ROS robot car uses an anodized aluminum alloy chassis, a Jetson Nano main control board, a depth camera, an 8400 mAh lithium battery with charge protection, brushed DC motors with 360-line AB (quadrature) encoders, a nine-axis gyroscope (IMU), an Rplidar A1 lidar, a 7-inch 1080p high-definition capacitive touch screen, a 4-degree-of-freedom robotic arm, and more.

Assembly: Assemble the selected hardware, including mounting and wiring the motors, sensors, and control board. Follow the documentation and assembly guide provided by the manufacturer, XiaoR GEEK.
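The 360-line AB encoders listed above output two square waves (channels A and B) that are 90° out of phase. Counting every edge of both channels ("4x decoding") gives 4 × 360 = 1440 counts per encoder revolution, and the order of the edges gives the direction of rotation. On a real robot this decoding normally runs in microcontroller firmware or a hardware counter rather than in Python; the sketch below only illustrates the logic, and its class and function names are hypothetical.

```python
# Sketch of 4x quadrature (AB) decoding for a 360-line encoder.
# Names are hypothetical; real decoding usually runs in firmware or hardware.

LINES_PER_REV = 360
COUNTS_PER_REV = 4 * LINES_PER_REV  # 1440 counts with 4x decoding

# Gray-code order of the (A, B) state for one direction of rotation,
# with the state encoded as (A << 1) | B: 00 -> 01 -> 11 -> 10 -> 00.
_FORWARD_SEQUENCE = [0b00, 0b01, 0b11, 0b10]


def _build_transition_table():
    """Map (previous_state, new_state) to +1 (forward) or -1 (reverse)."""
    table = {}
    for i, state in enumerate(_FORWARD_SEQUENCE):
        table[(state, _FORWARD_SEQUENCE[(i + 1) % 4])] = +1
        table[(state, _FORWARD_SEQUENCE[(i - 1) % 4])] = -1
    return table


_TRANSITIONS = _build_transition_table()


class QuadratureDecoder:
    def __init__(self):
        self.state = 0b00   # assume both channels start low
        self.count = 0      # signed position in encoder counts
        self.errors = 0     # transitions where both channels changed at once

    def update(self, a, b):
        """Feed the current levels (0 or 1) of channels A and B."""
        new_state = (a << 1) | b
        if new_state == self.state:
            return  # no edge since the last sample
        step = _TRANSITIONS.get((self.state, new_state))
        if step is None:
            # Both channels changed together: an edge was missed, so the
            # direction is ambiguous and the step is not counted.
            self.errors += 1
        else:
            self.count += step
        self.state = new_state


def counts_to_revolutions(count):
    """Convert decoded counts to encoder-shaft revolutions."""
    return count / float(COUNTS_PER_REV)
```

Feeding one full cycle 00 → 01 → 11 → 10 → 00 advances the count by 4, so 360 cycles give 1440 counts, one revolution of the encoder shaft. If the encoder sits on the motor shaft ahead of a gearbox, wheel revolutions also depend on the gear ratio, which is not given here.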

2. Install ROS

Install ROS by following the instructions on the official ROS website for your operating system and ROS version. This product uses ROS Melodic, which targets Ubuntu 18.04.

3. Write or obtain ROS driver

For hardware such as the lidar and motors, you may need to write or obtain ROS drivers so that ROS can communicate with these devices. For example, the rplidar_ros package provides a driver for Rplidar sensors.
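A motor driver for a ROS base usually turns wheel encoder counts into odometry. The sketch below assumes a two-wheel differential-drive layout (the drive geometry of the TH car is not stated here) with hypothetical wheel radius, track width, and counts per wheel revolution. A real ROS driver would publish the resulting pose as a nav_msgs/Odometry message and broadcast the odom → base_link transform; that plumbing is omitted so the code runs without ROS.

```python
import math


class DiffDriveOdometry:
    """Integrate left/right wheel encoder deltas into a 2D pose (x, y, theta).

    Assumes a differential-drive base; all parameters are hypothetical and
    must be measured on the actual robot.
    """

    def __init__(self, wheel_radius, track_width, counts_per_wheel_rev):
        self.wheel_radius = wheel_radius          # metres
        self.track_width = track_width            # distance between wheels, metres
        self.counts_per_wheel_rev = counts_per_wheel_rev
        self.x = 0.0
        self.y = 0.0
        self.theta = 0.0                          # radians, counter-clockwise positive

    def _counts_to_metres(self, counts):
        circumference = 2.0 * math.pi * self.wheel_radius
        return circumference * counts / float(self.counts_per_wheel_rev)

    def update(self, d_left_counts, d_right_counts):
        """Apply encoder count changes since the previous call; return the new pose."""
        d_left = self._counts_to_metres(d_left_counts)
        d_right = self._counts_to_metres(d_right_counts)
        d_center = (d_left + d_right) / 2.0
        d_theta = (d_right - d_left) / self.track_width
        # Midpoint integration: advance along the average heading of this step.
        heading = self.theta + d_theta / 2.0
        self.x += d_center * math.cos(heading)
        self.y += d_center * math.sin(heading)
        # Keep theta wrapped to [-pi, pi].
        new_theta = self.theta + d_theta
        self.theta = math.atan2(math.sin(new_theta), math.cos(new_theta))
        return self.x, self.y, self.theta
```

With `odom = DiffDriveOdometry(wheel_radius=0.05, track_width=0.2, counts_per_wheel_rev=1000)`, calling `odom.update(1000, 1000)` moves the pose one wheel circumference (about 0.314 m) forward, and a following `odom.update(-500, 500)` rotates it in place by π/2.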

4. Perception and mapping

Lidar mapping: Use ROS lidar mapping (SLAM) packages such as gmapping or hector_slam to build maps of the environment. These packages process lidar scans (gmapping also uses wheel odometry) to estimate the robot's position and heading while building a 2D occupancy grid map of the environment.
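gmapping and hector_slam estimate the robot pose and the map together, which is beyond a short example. The sketch below shows only the map-building half under a simplifying assumption: the robot pose is already known. Each lidar beam lowers the occupancy log-odds of the cells it passes through and raises it at the cell where it ends. The scan arguments mirror the `angle_min`, `angle_increment`, and `range_max` fields of a ROS sensor_msgs/LaserScan message; the log-odds increments are hypothetical tuning values, and this is not code from either package.

```python
import math

L_OCC = 0.85    # log-odds added to a cell where a beam ends (hypothetical value)
L_FREE = -0.4   # log-odds added to a cell a beam passes through (hypothetical value)


def bresenham(x0, y0, x1, y1):
    """Return the grid cells on the line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def to_cell(x, y, resolution):
    """Map a metric position to integer grid indices."""
    return int(math.floor(x / resolution)), int(math.floor(y / resolution))


def update_grid(grid, resolution, pose, ranges, angle_min, angle_increment, range_max):
    """Fold one scan into `grid`, a dict mapping (ix, iy) -> log-odds.

    `pose` is (x, y, theta) of the lidar in the map frame and is assumed known.
    """
    x, y, theta = pose
    origin = to_cell(x, y, resolution)
    for i, r in enumerate(ranges):
        if math.isnan(r) or r <= 0.0:
            continue  # invalid reading
        hit = r < range_max  # readings at or beyond max range mean "nothing seen"
        r = min(r, range_max)
        angle = theta + angle_min + i * angle_increment
        end = to_cell(x + r * math.cos(angle), y + r * math.sin(angle), resolution)
        ray = bresenham(origin[0], origin[1], end[0], end[1])
        for cell in ray[:-1]:
            grid[cell] = grid.get(cell, 0.0) + L_FREE
        grid[ray[-1]] = grid.get(ray[-1], 0.0) + (L_OCC if hit else L_FREE)


def occupancy_probability(log_odds):
    """Convert log-odds back to a probability in (0, 1)."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```

In ROS the finished map is published as a nav_msgs/OccupancyGrid, where each cell holds an occupancy value from 0 to 100, or -1 for unknown.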

Configuration and tuning: Configure the mapping package's parameters for your hardware and environment (for example, map resolution and how often scans are processed), then tune them as needed.
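Much of this tuning is a trade-off between map quality and computation. gmapping, for example, only processes a new scan after the robot has translated `linearUpdate` metres or rotated `angularUpdate` radians (documented defaults 1.0 and 0.5). Smaller values update the map more often at the cost of more processing on the Jetson Nano. The sketch below imitates that gating rule in plain Python to show the effect; it is an illustration, not gmapping's source code, and the function names are hypothetical.

```python
import math


def angle_diff(a, b):
    """Smallest signed difference a - b, wrapped to [-pi, pi]."""
    return math.atan2(math.sin(a - b), math.cos(a - b))


def should_process_scan(last_pose, pose, linear_update=1.0, angular_update=0.5):
    """True once the robot has moved `linear_update` metres or turned
    `angular_update` radians since the last processed scan.
    Poses are (x, y, theta) tuples."""
    moved = math.hypot(pose[0] - last_pose[0], pose[1] - last_pose[1])
    turned = abs(angle_diff(pose[2], last_pose[2]))
    return moved >= linear_update or turned >= angular_update


def count_processed_scans(poses, linear_update=1.0, angular_update=0.5):
    """Count how many scans along a pose trajectory would be processed.
    The first scan is always processed."""
    if not poses:
        return 0
    last = poses[0]
    processed = 1
    for pose in poses[1:]:
        if should_process_scan(last, pose, linear_update, angular_update):
            processed += 1
            last = pose
    return processed
```

For a robot driving 3 m in a straight line with a scan every 0.25 m, `poses = [(i * 0.25, 0.0, 0.0) for i in range(13)]`, `count_processed_scans(poses)` returns 4 with the defaults and 7 with `linear_update=0.5`.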

5. Navigation

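ROS navigation stack: Use the ROS navigation stack, built around the move_base node, to implement autonomous navigation. The stack combines global path planning (a route across the map to the goal), local path planning (following that route while avoiding nearby obstacles), and motion control (velocity commands sent to the base).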
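move_base loads its global planner as a plugin. The default, navfn, runs Dijkstra's algorithm over a costmap, and the global_planner package can use A* instead. The sketch below is a generic A* search on a small occupancy grid (0 = free, 1 = occupied) with 4-connected moves and unit step cost, to show the idea behind global path planning. It ignores the inflated obstacle costs a real costmap adds, assumes the start and goal cells are free, and is not code from either package.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 2D grid (list of rows; 0 = free, 1 = occupied).

    Uses 4-connected moves with unit cost and the Manhattan-distance heuristic,
    which never overestimates under those moves, so the returned path is shortest.
    Cells are (row, col). Returns the path as a list of cells, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries: (f = g + h, g, cell)
    best_cost = {start: 0}
    came_from = {}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cost > best_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found later
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)))
    return None
```

On the grid `[[0, 0, 0, 0], [1, 1, 1, 0], [0, 0, 0, 0]]`, `astar(grid, (0, 0), (2, 0))` has to detour through the single gap at `(1, 3)`, giving a 9-cell path.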

Configuration and tuning: Configure the navigation stack's parameters, such as goal tolerance and speed limits, and tune them as needed.
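To see what goal tolerances and speed limits do, the sketch below pairs a simple proportional go-to-goal controller, whose outputs are clamped to speed limits, with a goal check. The parameter names loosely mirror ROS local-planner parameters such as `max_vel_x`, `xy_goal_tolerance`, and `yaw_goal_tolerance`, but the controller itself is a hypothetical stand-in, not the algorithm of any move_base local planner (DWA and TrajectoryPlanner, for instance, sample and score candidate trajectories). It assumes a differential-drive base and uses hypothetical gains.

```python
import math


def wrap(angle):
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def clamp(value, low, high):
    return max(low, min(high, value))


def goal_reached(pose, goal, xy_goal_tolerance=0.1, yaw_goal_tolerance=0.2):
    """True when both position and heading are within tolerance of the goal."""
    dist = math.hypot(goal[0] - pose[0], goal[1] - pose[1])
    return dist <= xy_goal_tolerance and abs(wrap(goal[2] - pose[2])) <= yaw_goal_tolerance


def compute_cmd_vel(pose, goal, max_vel_x=0.3, max_vel_theta=1.0,
                    k_lin=0.8, k_ang=2.0, xy_goal_tolerance=0.1):
    """Return (linear, angular) velocity driving pose (x, y, theta) toward goal."""
    dx, dy = goal[0] - pose[0], goal[1] - pose[1]
    dist = math.hypot(dx, dy)
    if dist <= xy_goal_tolerance:
        # Position is close enough: rotate in place toward the goal heading.
        yaw_err = wrap(goal[2] - pose[2])
        return 0.0, clamp(k_ang * yaw_err, -max_vel_theta, max_vel_theta)
    heading_err = wrap(math.atan2(dy, dx) - pose[2])
    # Slow down when not facing the goal; never drive backwards.
    v = k_lin * dist * max(0.0, math.cos(heading_err))
    return clamp(v, 0.0, max_vel_x), clamp(k_ang * heading_err, -max_vel_theta, max_vel_theta)


def simulate(start, goal, dt=0.05, max_steps=2000):
    """Drive an ideal unicycle model until the goal check passes.

    Returns (reached, final_pose, peak_linear, peak_angular).
    """
    x, y, theta = start
    peak_v = peak_w = 0.0
    for _ in range(max_steps):
        if goal_reached((x, y, theta), goal):
            return True, (x, y, theta), peak_v, peak_w
        v, w = compute_cmd_vel((x, y, theta), goal)
        peak_v, peak_w = max(peak_v, abs(v)), max(peak_w, abs(w))
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta = wrap(theta + w * dt)
    return False, (x, y, theta), peak_v, peak_w
```

Tightening `xy_goal_tolerance` makes the robot creep longer near the goal (the linear command shrinks with distance), while loosening it ends the approach earlier; the clamps guarantee the commands never exceed `max_vel_x` and `max_vel_theta` regardless of the gains.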

6. Testing in real environment

Deploy the robot car in a real environment and test mapping and navigation, then adjust and optimize based on the test results.

7. Implementation of AI functions

Machine learning/deep learning: The TH ROS robot is equipped with a depth camera. Besides supporting all the AI vision features of an ordinary RGB camera, it enables further vision development such as depth image processing and RTAB-Map 3D visual mapping. Supported functions include face recognition, edge detection, ArUco augmented reality, gesture recognition, KCF target tracking, touch screen operation, visual line patrol (line following), and color recognition.
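How the TH robot implements visual line patrol is not described here. One common approach, sketched below under that caveat, is to threshold each camera frame into a binary mask of line pixels (for example with OpenCV's `cv2.inRange` on an HSV image), find the centroid of the line in the rows nearest the robot, and steer proportionally toward it. To keep the sketch runnable without OpenCV or ROS, the mask is a plain list of lists, the function names are hypothetical, and the gain and limits are hypothetical values.

```python
def line_offset(mask, rows_from_bottom=10):
    """Horizontal offset of the line in the bottom rows of a binary mask.

    `mask` is a list of image rows (top to bottom), each a list of 0/1 values
    where 1 marks a line pixel; the image is assumed to be wider than 1 pixel.
    Returns a value in [-1, 1] (negative = line left of center, positive = right),
    or None if no line pixel is visible in those rows.
    """
    height, width = len(mask), len(mask[0])
    count = 0
    sum_x = 0
    for row in mask[max(0, height - rows_from_bottom):]:
        for x, value in enumerate(row):
            if value:
                count += 1
                sum_x += x
    if count == 0:
        return None
    center = (width - 1) / 2.0
    return (sum_x / float(count) - center) / center


def steering_command(offset, k_p=1.5, max_angular=1.0):
    """Proportional steering toward the line.

    ROS uses counter-clockwise-positive angular velocity (REP 103), so a line
    to the right (positive offset) produces a negative (clockwise) command.
    Returns 0.0 when the line is lost; the caller should then stop or search.
    """
    if offset is None:
        return 0.0
    return max(-max_angular, min(max_angular, -k_p * offset))
```

On the robot, the returned value would be sent as the `angular.z` of a geometry_msgs/Twist velocity command, together with a small constant forward speed.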

8. Continuous optimization and expansion

You can continue to optimize the robot's performance and extend its functions to suit your needs and application scenarios. For example, you can add sensors to enhance its perception or use more sophisticated algorithms to improve mapping and navigation accuracy. While building mapping, navigation, and other AI functions on a lidar-equipped robot car, the ROS documentation and tutorials, as well as community forums, are useful sources of help and support.